Code:

Const C_maxInputs = 5000,C_maxNPL = 500,C_maxLayers = 5

Const C_maxNet = 16 '50000 'Max number of neurons any single neuron can be linked to (on a per-neuron basis, so 2 neurons is limit*2 and so on, up to n (infinity))

Const nBias = - 1 'Do not change!(cue everyone changing it, crashing their machines..;->

Const C_sypAct = 1 'Activation value. Change to suit your needs.

Const V_maxVec = 500

Const c_maxHis = 500

Type vector

size as integer

v(V_maxVec) as single

End Type

Type neuron

numNet as integer

weight(C_maxNet+1) as single

net (C_maxNet) as neuron ptr

End Type

Type nLayer

neuron(C_maxNPL) as neuron ptr

numNeurons as integer

numIn as integer

End Type

Type neuralNet

numInputs as integer

numOutputs as integer

numLayers as integer

numNPL as integer

nLayer(C_maxLayers) as nLayer ptr

End Type

Dim shared vTmp as vector ptr 'I/O vector used by neural nets

Dim shared as neuron ptr nFire(c_maxHis)

Dim shared as neuron ptr nNull(c_maxHis) 'Learning history for one cycle: neurons that fired (nFire) and neurons that did not (nNull).

sub initFFnet()

vTmp = New vector

End sub

Function neuron(numNet as integer) as neuron ptr

dim as neuron ptr neur = New neuron

For i as integer= 1 To numNet + 1

neur->weight(i) = Rnd(1)*.4

Next

neur->weight(C_maxNet+1) = Rnd(1)*.6

Return neur

End Function

Function nLayer(numNeurons as integer,numIn as integer) as nLayer ptr

dim as nLayer ptr nLay = New nLayer

nLay->numNeurons = numNeurons

nLay->numIn = numIn

For n as integer=1 To numNeurons

nLay->neuron(n) = neuron(numIn)

Next

Return nLay

End Function

sub linkLayers(l1 as nLayer ptr,l2 as nLayer ptr,chains as integer = 0, preser as integer = 1) '1> 2<> 3<

For n as integer= 1 To l1->numNeurons

If preser=0 then

l1->neuron(n)->numNet=0

End If

For t as integer= 1 To l2->numNeurons

l1->neuron(n)->net(t+l1->neuron(n)->numNet) = l2->neuron(t)

Next

l1->neuron(n)->numNet = l1->neuron(n)->numNet+l2->numNeurons

Next

If chains<>0 then linkLayers(l2,l1,0,preser)

End sub 'To double-chain two layers, set chains to a non-zero value

Function neuralNet(numInputs as integer,numHidden as integer,numOutputs as integer,populate as integer= 1,initVecTmp as integer = 1) as neuralNet ptr

dim as neuralNet ptr outp = New neuralNet

outp->numInputs = numInputs

outp->numOutputs = numOutputs

outp->numLayers = 3 'input, hidden and output layers (FFnetCycle assumes exactly 3)

If populate then

outp->nLayer(1) = nLayer(numInputs,numInputs)

outp->nLayer(2) = nLayer(numHidden,numInputs)

outp->nLayer(3) = nLayer(numOutputs,numHidden)

linkLayers(outp->nLayer(1),outp->nLayer(2),1)

linkLayers(outp->nLayer(2),outp->nLayer(3),1,1)

End If

Return outp

End Function

sub punishNet()

If c_maxHis=0 then Exit Sub 'nothing to adjust without a history

For j as integer = 1 To c_maxHis

If nFire(j) <>0 then

For n as integer = 1 To c_maxNet

nFire(j)->weight(n) = nFire(j)->weight(n) - rnd(1)

Next

EndIf

If nNull(j) <>0 then

For n as integer = 1 To c_maxNet

nNull(j)->weight(n) = nNull(j)->weight(n) + rnd(1)

Next

EndIf

Next

End Sub

sub rewardNet()

If c_maxHis=0 then Exit Sub 'nothing to adjust without a history

For j as integer = 1 To c_maxHis

If nFire(j) <>0 then

For n as integer = 1 To c_maxNet

nFire(j)->weight(n) = nFire(j)->weight(n) + 0.05

Next

EndIf

If nNull(j) <>0 then

For n as integer = 1 To c_maxNet

nNull(j)->weight(n) = nNull(j)->weight(n) - 0.02

Next

EndIf

Next

End Sub

sub clearHistory()

For j as integer = 1 To c_maxHis

nFire(j) = 0

nNull(j) = 0

Next

End sub

sub pushVector(in as vector ptr,v as single)

If in->size < V_maxVec then in->size=in->size+1 Else exit sub

If in->Size > 1 then

For i as integer=in->size-1 To 1 Step - 1

in->v(i+1)=in->v(i)

Next

EndIf

in->v(1) = v

End sub

Function sigmoid(in as single,round as single) as single

Return ( 1. / (1. + Exp(-in / round)))

End Function

sub FFnetCycle(in as neuralNet ptr) ' input->[?> hidden ?> output >]->user/GA

dim tWeight as single

clearHistory

For layer as integer = 1 To 3

For i as integer = 1 To in->nLayer(layer)->numNeurons

tWeight = 0

For n as integer = 1 To in->nLayer(layer)->numIn

tWeight = tWeight + (vTmp->v(n) * in->nLayer(layer)->neuron(i)->weight(n))

Next

tWeight = tWeight + (in->nLayer(layer)->neuron(i)->weight(c_maxNet+1)) * nBias

pushVector(vTmp,sigmoid(tWeight,C_sypAct))

If vTmp->v(1) > in->nLayer(layer)->neuron(i)->weight(C_maxNet+1) then

For j as integer = 1 To c_maxHis

If nFire(j) = 0 then

nFire(j) = in->nLayer(layer)->neuron(i)

Exit for

End If

Next

Else

For j as integer= 1 To c_maxHis

If nNull(j) = 0 then

nNull(j) = in->nLayer(layer)->neuron(i)

Exit for

End If

Next

End If

Next

Next

End sub

sub setInput(i as integer,v as single)

If i>vTmp->size then vTmp->size = i

vTmp->v(i) = v

End sub

Function getInput(i as integer)as single

Return vTmp->v(i)

End Function

sub clearNetIO() 'Call after each cycle, once its inputs/results are no longer needed.

vTmp->size = 0

vTmp->v(1) = 0

End sub

Function getOutput(net as neuralNet ptr,n as integer,sum as integer = 0) as integer

'If sum=0 then Return int(.5+ net->nLayer(3)->neuron(n)->weight(C_maxNet+1))

For j as integer = 1 To c_maxHis

If nFire(j) = net->nLayer(3)->neuron(n) then return 1

If nNull(j) = net->nLayer(3)->neuron(n) then return 0

Next

return 0

End Function
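For reference, the per-neuron computation in FFnetCycle above can be written out as a formula. The symbols are shorthand introduced here, not names from the code: $v_n$ stands for `vTmp->v(n)`, $w_n$ for `weight(n)`, $w_b$ for the extra weight stored at `weight(C_maxNet+1)`, $N$ for the layer's `numIn`, and $s$ for `C_sypAct`.

```latex
\begin{align}
t &= \sum_{n=1}^{N} v_n\, w_n + w_b \cdot \mathrm{nBias}
   = \sum_{n=1}^{N} v_n\, w_n - w_b \qquad (\mathrm{nBias} = -1) \\
y &= \frac{1}{1 + e^{-t/s}}
\end{align}
```

The value $y$ is pushed onto vTmp; the neuron is recorded in nFire when $y > w_b$ and in nNull otherwise.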

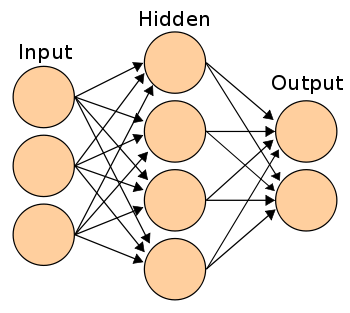

A neural net is a system that can learn through virtual "neurons".

It's possible to define a network with many variables as inputs; the network returns some bits as outputs. At first it returns random results, but it can be trained (by "rewarding" and "punishing" it) to return correct ones. That is how the net learns.
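The reward/punish rule can be tried in isolation. The sketch below is a standalone FreeBASIC program, not part of the library: the array names and the two helper subs are made up for illustration. Only the step sizes (+0.05 / -0.02 on reward, a random step on punish) are taken from rewardNet and punishNet above.

```freebasic
' Standalone sketch of the weight updates done by rewardNet and punishNet.
' reward(), punish(), firedW and nullW are hypothetical names for this demo.

Sub reward(fired() As Single, idle() As Single)
    For n As Integer = LBound(fired) To UBound(fired)
        fired(n) += 0.05   ' strengthen the weights of a neuron that fired
        idle(n)  -= 0.02   ' weaken the weights of a neuron that did not
    Next
End Sub

Sub punish(fired() As Single, idle() As Single)
    For n As Integer = LBound(fired) To UBound(fired)
        fired(n) -= Rnd    ' random step down for a neuron that fired
        idle(n)  += Rnd    ' random step up for a neuron that did not
    Next
End Sub

Dim As Single firedW(1 To 3) = {0.10, 0.20, 0.30} ' weights of a fired neuron
Dim As Single nullW(1 To 3)  = {0.10, 0.20, 0.30} ' weights of a silent neuron

reward(firedW(), nullW())
Print "after reward: "; firedW(1); " "; nullW(1)

punish(firedW(), nullW())
Print "after punish: "; firedW(1); " "; nullW(1)
```

A reward is a small, fixed nudge that keeps the current behaviour, while a punishment is a large random jump, so a wrong answer sends the net searching for a different set of weights.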

Here is a sample (with two inputs and two outputs):

Code:

initFFnet

dim shared neur as neuralNet ptr

neur=neuralNet(2,8,2)

dim shared as integer val_out

dim i as single

dim c as string

dim e as integer

do

input i,e

clearNetIO

setinput(1,i)

setinput(2,e)

FFnetCycle(neur)

? getOutput(neur,1), getOutput(neur,2)

print "is this correct?":c=ucase(input(1))

If c<>"Y" Then punishnet():?"No" else rewardnet:?"Yes"

loop

Code:

for x as integer=0 to 100

if rnd(1)>.2 then i=1-i

if rnd(1)>.2 then e=1-e

clearNetIO

setinput(1,i)

setinput(2,e)

FFnetCycle(neur)

if getOutput(neur,1)=e and getOutput(neur,2) =i then c="Y" else c="N"

If c<>"Y" Then punishnet():x=0 else rewardnet

next

Neural networks are used for many tasks, such as OCR or speech recognition.